LLM ABCs: “Building LLM-Powered Applications” — A Gateway to AI’s Future

In the fast-evolving world of technology, Large Language Models (LLMs) like GPT-3 have become central to AI research and application development. Because they can understand and generate human-like text, LLMs are not just tools of the future; they are already shaping our present. This guide covers how to build applications powered by these models, from the underlying concepts to the practical steps of implementation.

Before diving deep into application building, it’s crucial to grasp what LLMs are and why they matter:

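– Definition and Function: LLMs are neural network models, typically based on the transformer architecture, trained on vast amounts of human-written text. They generate text by repeatedly predicting the next token (a word or word fragment), which lets them follow context and perform specific tasks such as summarizing long articles or answering questions.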

– Applications: From chatbots to content generators, LLMs are versatile. They power complex applications in sectors ranging from healthcare to finance, making them instrumental in driving business efficiency and innovation.
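
To make next-token prediction concrete, here is a toy sketch of autoregressive generation. The lookup table `NEXT_TOKEN_SCORES` is a hypothetical stand-in for a real model: it conditions only on the previous token, whereas an actual LLM scores every possible next token with a neural network that sees the whole context. Always picking the top-scoring token (greedy decoding) is just one simple strategy; real applications often sample instead.

```python
# Hypothetical toy "model": scores for the next token given only the previous token.
# A real LLM computes these scores with a neural network over the entire context.
NEXT_TOKEN_SCORES: dict[str, dict[str, float]] = {
    "<start>": {"LLMs": 0.9, "Models": 0.1},
    "LLMs": {"generate": 0.7, "summarize": 0.3},
    "generate": {"text": 0.8, "answers": 0.2},
    "summarize": {"articles": 1.0},
    "text": {"<end>": 1.0},
    "answers": {"<end>": 1.0},
    "articles": {"<end>": 1.0},
    "Models": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: repeatedly append the highest-scoring next token."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_SCORES.get(tokens[-1], {})
        if not candidates:
            break  # no known continuation
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break  # the model signals the text is complete
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(generate()))  # LLMs generate text
```

Each step feeds the model's own output back in as input, which is why the loop appends one token at a time.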

The first step is identifying the problem your application will solve. Whether the goal is better customer service through a conversational assistant or automated content creation, a clear purpose sets the direction for development.

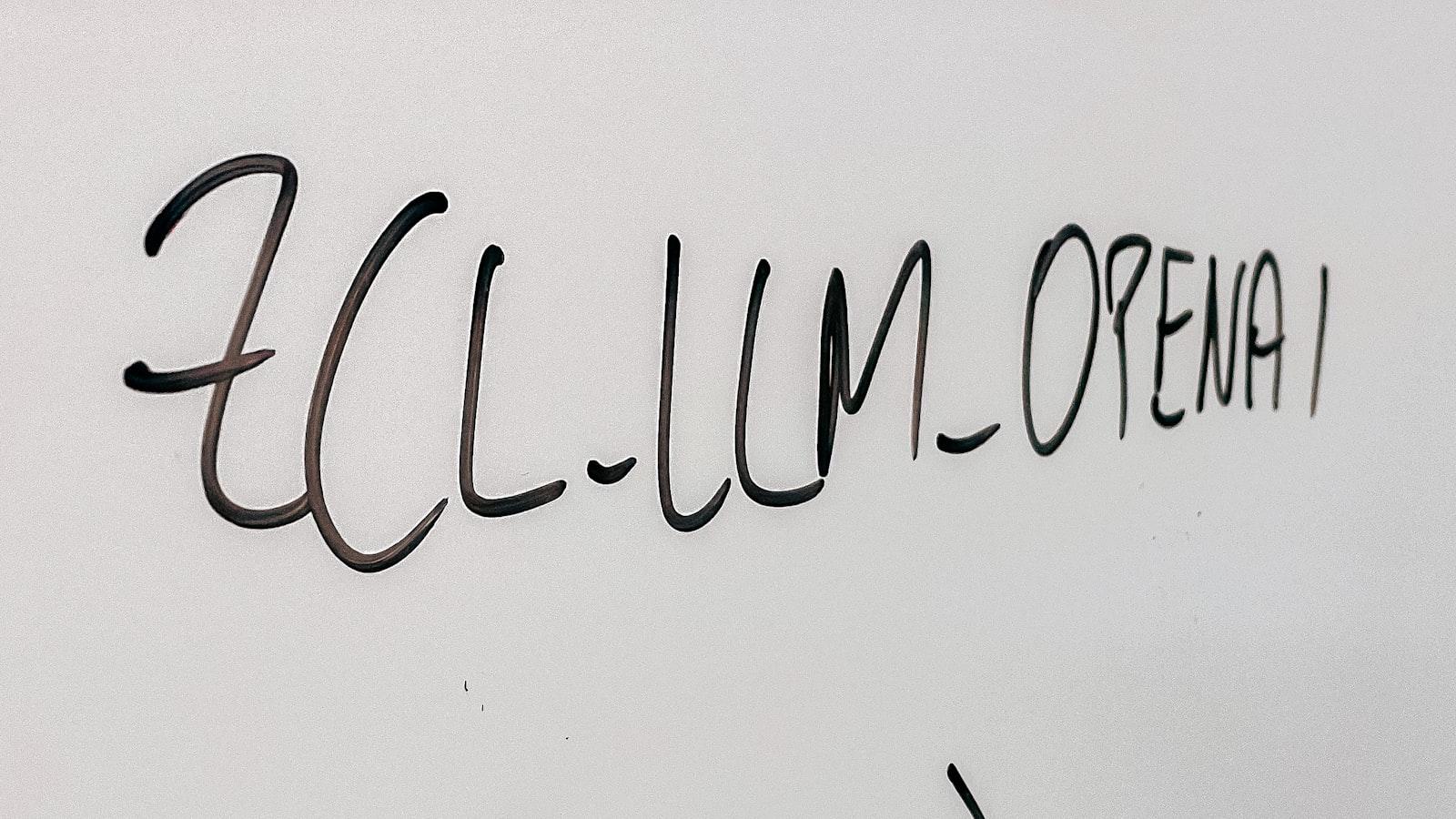

Not all LLMs are built the same. The right model for your application depends on factors such as task complexity, the linguistic nuance required, and scalability, along with practical constraints like context-window size, latency, and cost.
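
One way to make this selection systematic is to treat hard constraints as filters and then rank the remaining models by quality on your own task. The sketch below assumes you have already measured each candidate's latency and evaluation score; the model names, prices, and numbers are invented for illustration and do not describe real products.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CandidateModel:
    name: str                  # hypothetical identifiers, not real products
    context_window: int        # maximum tokens per request
    cost_per_1k_tokens: float  # USD, illustrative numbers only
    p95_latency_ms: int        # measured in your own environment
    eval_score: float          # 0..1 score on your own task-specific test set


@dataclass(frozen=True)
class Requirements:
    min_context_window: int
    max_cost_per_1k_tokens: float
    max_p95_latency_ms: int


def choose_model(candidates: list[CandidateModel], req: Requirements) -> Optional[CandidateModel]:
    """Discard models that violate a hard requirement, then pick the best-scoring survivor."""
    eligible = [
        m for m in candidates
        if m.context_window >= req.min_context_window
        and m.cost_per_1k_tokens <= req.max_cost_per_1k_tokens
        and m.p95_latency_ms <= req.max_p95_latency_ms
    ]
    # Highest eval score wins; on a tie, prefer the cheaper model.
    return max(eligible, key=lambda m: (m.eval_score, -m.cost_per_1k_tokens), default=None)


models = [
    CandidateModel("large-model", 128_000, 0.010, 2500, 0.92),
    CandidateModel("medium-model", 32_000, 0.002, 900, 0.88),
    CandidateModel("small-model", 8_000, 0.0005, 300, 0.75),
]
req = Requirements(min_context_window=16_000, max_cost_per_1k_tokens=0.005, max_p95_latency_ms=1500)
print(choose_model(models, req).name)  # medium-model
```

Treating cost, context window, and latency as pass/fail limits keeps the ranking focused on quality. If no candidate qualifies, the function returns `None`, a signal that the requirements need revisiting.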

A robust dataset is critical if you plan to train or fine-tune your model. This phase might involve data scraping, purchasing datasets, or drawing on existing data repositories. Fine-tuning on high-quality, relevant data tends to improve accuracy and efficiency on your specific task.
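
If you do fine-tune, much of the data work is cleaning and formatting. The sketch below normalizes whitespace, drops incomplete and duplicate examples, and writes one JSON object per line (JSONL). The `prompt`/`completion` field names are an assumption; check the exact schema your training tool or provider expects.

```python
import json


def prepare_examples(raw_pairs: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Normalize whitespace, drop empty or duplicate pairs, and return training records."""
    seen: set[tuple[str, str]] = set()
    records: list[dict[str, str]] = []
    for prompt, completion in raw_pairs:
        prompt = " ".join(prompt.split())          # collapse runs of whitespace
        completion = " ".join(completion.split())
        if not prompt or not completion:
            continue  # skip incomplete examples
        key = (prompt.lower(), completion.lower())
        if key in seen:
            continue  # skip duplicates (case-insensitive)
        seen.add(key)
        records.append({"prompt": prompt, "completion": completion})
    return records


def to_jsonl(records: list[dict[str, str]]) -> str:
    """Serialize one JSON object per line, a common format for fine-tuning data."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


raw = [
    ("Where is my order?", "You can track it from the Orders page."),
    ("where is my   order?", "You can track it from the Orders page."),
    ("Do you ship abroad?", ""),
]
print(to_jsonl(prepare_examples(raw)))
```

Only the first pair survives here: the second is a duplicate once whitespace and case are normalized, and the third has an empty completion.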

Next comes the coding phase, in which developers integrate the LLM into the application. This might involve setting up API access, writing the logic for user interactions, and making sure the LLM can handle real-world queries.
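
A core piece of the interaction logic is keeping the conversation history and passing it to the model on each turn. The sketch below hides the provider behind a hypothetical `ChatModel` interface with a single `complete` method, so the `EchoModel` stub can stand in for a real API during local testing. The role/content message format mirrors a convention many chat APIs use, but it is an assumption here, as is the simple turn-count limit used to keep requests within the context window.

```python
from dataclasses import dataclass, field
from typing import Protocol

Message = dict[str, str]  # {"role": ..., "content": ...}; an assumed, commonly used shape


class ChatModel(Protocol):
    """Hypothetical interface; wrap your provider's SDK behind this method."""

    def complete(self, messages: list[Message]) -> str: ...


@dataclass
class ChatSession:
    model: ChatModel
    system_prompt: str
    max_history: int = 10  # send only the most recent messages so requests fit the context window
    history: list[Message] = field(default_factory=list)

    def ask(self, user_text: str) -> str:
        user_text = user_text.strip()
        if not user_text:
            return "Please enter a question."  # handle empty input without calling the model
        self.history.append({"role": "user", "content": user_text})
        messages = [{"role": "system", "content": self.system_prompt}] + self.history[-self.max_history:]
        reply = self.model.complete(messages)
        self.history.append({"role": "assistant", "content": reply})
        return reply


class EchoModel:
    """Stand-in model for local testing; a real implementation would call an LLM API."""

    def complete(self, messages: list[Message]) -> str:
        return f"You said: {messages[-1]['content']}"


session = ChatSession(model=EchoModel(), system_prompt="You are a helpful retail assistant.")
print(session.ask("Where is my order?"))  # You said: Where is my order?
```

Keeping the provider behind an interface means swapping models, or using a stub in tests, does not touch the conversation logic.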

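After implementation, extensive testing is necessary. Testing helps identify bugs and opportunities for optimization, and feedback loops, in which reviewers or users rate responses and those ratings inform changes to prompts, data, or model choice, can significantly refine the LLM's behavior.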
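
A lightweight regression suite can catch obvious problems before each release. The sketch below checks that every answer mentions some required keywords and reports the pass rate. `fake_answer` is a hypothetical stand-in for your application, and keyword matching is a deliberately simple check that would normally be supplemented with human review or more robust scoring.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    must_include: tuple[str, ...]  # keywords a correct answer should mention (a simple, assumed check)


def evaluate(answer_fn: Callable[[str], str], cases: list[EvalCase]) -> tuple[float, list[str]]:
    """Run every case and return (pass rate, prompts that failed)."""
    failures = []
    for case in cases:
        answer = answer_fn(case.prompt).lower()
        if not all(keyword.lower() in answer for keyword in case.must_include):
            failures.append(case.prompt)
    pass_rate = 1 - len(failures) / len(cases) if cases else 0.0
    return pass_rate, failures


def fake_answer(prompt: str) -> str:
    # Hypothetical stand-in for the application under test.
    canned = {"What is your return window?": "Returns are accepted within 30 days."}
    return canned.get(prompt, "I'm not sure.")


cases = [
    EvalCase("What is your return window?", ("30 days",)),
    EvalCase("Do you ship to Canada?", ("canada",)),
]
rate, failed = evaluate(fake_answer, cases)
print(f"pass rate: {rate:.0%}, failed: {failed}")  # pass rate: 50%, failed: ['Do you ship to Canada?']
```

Re-running the same cases after every change to prompts, data, or model gives a quick signal of whether that change helped or hurt.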

Once the application passes testing, it is ready for deployment. Ongoing maintenance remains vital, however: model providers release updates, new data becomes available, and user interactions change over time, so the application's output quality needs continuous monitoring.
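
Monitoring can start with something as simple as tracking user ratings. The sketch below keeps a rolling window of thumbs-up/thumbs-down feedback and flags the application for review when the share of unhelpful answers exceeds a threshold. The window size, threshold, and minimum sample count are illustrative assumptions, not recommended values.

```python
from collections import deque


class FeedbackMonitor:
    """Track the most recent helpful/unhelpful ratings and flag when quality drops."""

    def __init__(self, window: int = 100, max_negative_rate: float = 0.2, min_samples: int = 20):
        # Oldest ratings fall out automatically once the window is full.
        self.ratings: deque[bool] = deque(maxlen=window)  # True = helpful, False = unhelpful
        self.max_negative_rate = max_negative_rate
        self.min_samples = min_samples

    def record(self, helpful: bool) -> None:
        self.ratings.append(helpful)

    def negative_rate(self) -> float:
        if not self.ratings:
            return 0.0
        return self.ratings.count(False) / len(self.ratings)

    def needs_review(self) -> bool:
        # Avoid alerting on too little data.
        return len(self.ratings) >= self.min_samples and self.negative_rate() > self.max_negative_rate


monitor = FeedbackMonitor(window=50, max_negative_rate=0.2, min_samples=10)
for helpful in [True] * 7 + [False] * 3:
    monitor.record(helpful)
print(monitor.negative_rate(), monitor.needs_review())  # 0.3 True
```

Because the window is bounded, the monitor reflects recent behavior, so a regression after a model update shows up instead of being diluted by months of older ratings.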

| Advantage | Explanation |
|---|---|
| Enhanced User Experience | LLMs can interact in a human-like manner, increasing user engagement and satisfaction. |
| Scalability | Applications powered by LLMs can handle growing volumes of requests efficiently without a drop in performance. |
| Cost Efficiency | Automating tasks with LLMs can reduce the long-term costs associated with manual operations. |
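
The cost-efficiency claim is easiest to check with back-of-the-envelope arithmetic. The sketch compares estimated monthly API spend against staff time for the same volume of queries. Every number in the example (query volume, tokens per query, price per 1,000 tokens, handling time, wage) is hypothetical, and the estimate ignores development, hosting, and human-review costs.

```python
def monthly_llm_cost(queries: int, tokens_per_query: int, usd_per_1k_tokens: float) -> float:
    """API spend: total tokens processed, in thousands, times the price per 1K tokens."""
    return queries * tokens_per_query / 1000 * usd_per_1k_tokens


def monthly_manual_cost(queries: int, minutes_per_query: float, usd_per_hour: float) -> float:
    """Staff cost: total handling time in hours times the hourly wage."""
    return queries * minutes_per_query / 60 * usd_per_hour


# Hypothetical inputs for illustration only.
queries = 10_000
llm = monthly_llm_cost(queries, tokens_per_query=1_500, usd_per_1k_tokens=0.002)
manual = monthly_manual_cost(queries, minutes_per_query=4, usd_per_hour=20.0)
print(f"LLM: ${llm:,.2f}  manual: ${manual:,.2f}")  # LLM: $30.00  manual: $13,333.33
```

Plugging in your own provider's pricing and measured handling times turns the table's qualitative claim into a figure you can verify.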

Imagine a retail company integrating an LLM-powered chatbot to handle customer queries. Suppose the chatbot cut customer wait times by 40% and also surfaced insights into common customer issues; that combination illustrates the practical benefits of adopting such AI-driven solutions.

As technology continues to advance, understanding and integrating AI capabilities, particularly those offered by LLMs, is becoming increasingly important. Building LLM-powered applications can be complex, but the gains they bring in efficiency, scalability, and user experience make the effort worthwhile. Entering the era of generative AI also means treating every error as an opportunity to improve. The time to harness these capabilities is now, and doing so promises to pave the way for a future where AI and human ingenuity work together seamlessly.
