Introduction

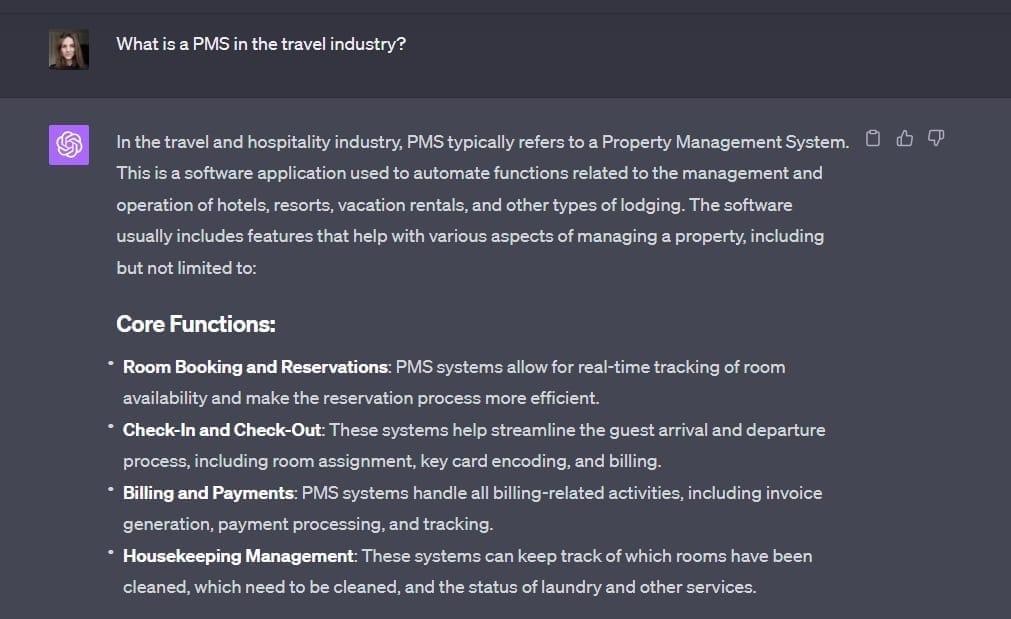

Have you ever felt like you’re speaking a different language when trying to communicate with AI language models? You’re not alone. Crafting the perfect prompt to elicit the desired response from a Large Language Model (LLM) can sometimes feel like navigating a maze blindfolded. But what if I told you there’s a way to turn this chaos into clarity? Enter LangChain, an open-source framework for building applications on top of LLMs that has been making waves in the world of prompt engineering.

In this article, titled “From Chaos to Clarity: How I Improved LLM Output Consistency with LangChain,” we’re going to dive deep into the art and science of prompt engineering. Whether you’re a developer, a content creator, a business professional, or just someone fascinated by the potential of AI, this piece is crafted with you in mind. Our journey will explore the nuts and bolts of constructing effective prompts that not only communicate your intent to the AI but also significantly improve the consistency and quality of its outputs.

Why Prompt Engineering Matters

In the rapidly evolving landscape of AI, understanding how to interact with language models effectively is no longer optional—it’s essential. The right prompt can mean the difference between getting a response that’s spot-on or one that misses the mark entirely. That’s where prompt engineering comes in. It’s the skill of designing prompts that guide AI to understand and execute tasks as accurately and efficiently as possible.

Navigating Through the Article

- Understanding Prompt Structure: We’ll start by breaking down the anatomy of a successful prompt. What makes some prompts more effective than others? How can you structure your prompts to get the best results?

- The Power of Specificity and Context-Setting: Learn how being specific and setting the right context can drastically change the AI’s responses. We’ll provide examples to show how minor tweaks can lead to major improvements.

- Real-World Applications: See how businesses, content creators, and developers are using well-crafted prompts to automate tasks, generate content, and enhance productivity. These examples will inspire you to apply these techniques in your own projects.

- Step-by-Step Guide with LangChain: Discover how LangChain can simplify the process of improving LLM output consistency. We’ll walk you through a step-by-step guide on using this tool to refine your prompts and achieve better results.

Style and Format

To make this journey as enjoyable as it is informative, we’ll keep the tone light and the explanations clear. Expect plenty of real-world examples, actionable tips, and step-by-step guidance. Bulleted lists break down complex ideas, and, where appropriate, tables compare prompt variations or display prompt structures for different use cases, making it easier for you to visualize and apply these concepts.

So, if you’re ready to transform your interactions with AI from frustrating to fruitful, keep reading. By the end of this article, you’ll not only have a solid understanding of prompt engineering but also the tools and techniques to craft prompts that lead to more consistent, accurate, and useful AI outputs. Let’s embark on this exciting journey from chaos to clarity together!

– Unraveling the Mystery of LangChain: What It Is and Why It Matters

LangChain is not just another tool in the AI toolbox; it’s a different way of structuring how we interact with language models. At its core, LangChain is a framework for composing LLM applications from smaller building blocks, such as prompt templates, model calls, retrievers, and output parsers, and, as the name suggests, for chaining those blocks together to perform complex tasks that a single prompt or model might struggle with. This modular approach to problem-solving lets different components be “chained” together, each handling a specific part of a task. For instance, imagine a scenario where one model excels at understanding natural language queries, another is adept at searching for information, and a third shines in summarizing content. LangChain enables these components to work in concert, each playing to its strengths, to achieve a result that’s greater than the sum of its parts.

To put this into perspective, consider the challenge of improving the consistency of outputs from large language models (LLMs). Before LangChain, achieving consistent and reliable results often felt like trying to hit a moving target. However, by applying LangChain’s principles, we can construct a workflow where:

- A query understanding model interprets the user’s request.

- An information retrieval model fetches relevant data or content.

- A summarization model distills this information into a concise response.

This sequence lets each step be handled by the component best suited to it, which can noticeably improve the overall quality and consistency of the output. Below is a simplified outline of how these components can be structured with LangChain:

- Step 1: User inputs a query.

- Step 2: Query understanding model interprets the request.

- Step 3: Information retrieval model searches for relevant data.

- Step 4: Summarization model generates a concise summary of the findings.
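The four steps above can be sketched in plain Python to show the chaining idea itself. This is deliberately not LangChain’s API: `understand_query`, `retrieve`, `summarize`, and `chain` are hypothetical stand-ins, the “retrieval” is a keyword lookup over a tiny in-memory corpus, and the “summary” just keeps each document’s first sentence, so the sketch runs without a model or API key. In a real LangChain app, each stand-in would be replaced by a prompt-plus-model step or a retriever.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-ins for the three models in the workflow. Each step takes
# the previous step's output and returns its own, so they can be chained.

STOPWORDS = {"what", "is", "the", "a", "an", "of", "in", "about", "tell", "me"}

# Tiny in-memory corpus standing in for a real search index (illustrative text).
CORPUS = {
    "solar": "Solar panels convert sunlight into electricity. They work best in direct sun.",
    "wind": "Wind turbines convert the kinetic energy of wind into electricity.",
    "hydro": "Hydropower generates electricity from flowing water.",
}


@dataclass
class Query:
    raw: str
    keywords: List[str]


def understand_query(user_input: str) -> Query:
    """Step 2: 'interpret' the request (here: lowercase keywords minus stopwords)."""
    words = [w.strip("?.,!").lower() for w in user_input.split()]
    return Query(raw=user_input, keywords=[w for w in words if w and w not in STOPWORDS])


def retrieve(query: Query) -> List[str]:
    """Step 3: fetch every document whose key appears among the query keywords."""
    return [text for key, text in CORPUS.items() if key in query.keywords]


def summarize(docs: List[str]) -> str:
    """Step 4: 'summarize' (here: keep the first sentence of each document)."""
    if not docs:
        return "No relevant information found."
    return " ".join(doc.split(". ")[0].rstrip(".") + "." for doc in docs)


def chain(*steps: Callable) -> Callable:
    """Compose steps left to right: each step's output is the next step's input."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


# Step 1 is the user's input; steps 2-4 run in sequence.
pipeline = chain(understand_query, retrieve, summarize)
```

Calling `pipeline("Tell me about solar and wind power")` returns the first sentence of the solar and wind entries. Because each step depends only on the previous step’s output, any one of them can be swapped for a real model call without touching the others, which is the modularity this section describes.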

By adopting this modular approach, not only do we streamline the process of generating high-quality outputs, but we also open up new possibilities for customizing and optimizing AI workflows. LangChain represents a significant leap forward in our ability to harness the full potential of AI models, making it a pivotal development for anyone involved in prompt engineering, content creation, or any field that relies on sophisticated AI interactions.

– Crafting the Perfect Prompt: Techniques for Enhanced LLM Output

In the quest for the perfect prompt, understanding the nuances of prompt structure and specificity can significantly enhance the output of Large Language Models (LLMs) like GPT-3, whether you call them directly or through a framework like LangChain. A well-structured prompt acts as a clear guide, directing the AI to generate responses that are not only relevant but also rich in context and detail. For instance, instead of asking a vague question like “What’s the weather?”, specifying “What’s the weather forecast for New York City on July 4th, 2023?” tells the model exactly which place and date you care about, so the response is immediately more useful and targeted. This specificity principle applies across various applications, from content creation to data analysis: the more detailed your prompt, the more precise and actionable the AI’s response tends to be.
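One way to make specificity a habit is to write prompts as templates whose required details must be filled in before anything reaches the model. The sketch below uses Python’s standard-library `string.Template`, whose `substitute()` raises `KeyError` when a placeholder has no value; `build_prompt` and the template text are illustrative. LangChain has its own prompt-template classes for the same purpose, which this sketch does not use.

```python
from string import Template

# A vague prompt has no placeholders, so nothing forces you to be specific.
VAGUE = Template("What's the weather?")

# A specific prompt declares the details it needs as $placeholders.
SPECIFIC = Template("What's the weather forecast for $city on $date?")


def build_prompt(template: Template, **details: str) -> str:
    """Fill every placeholder; Template.substitute raises KeyError if one is missing."""
    return template.substitute(**details)


print(build_prompt(SPECIFIC, city="New York City", date="July 4th, 2023"))
# What's the weather forecast for New York City on July 4th, 2023?
```

Leaving out a detail, as in `build_prompt(SPECIFIC, city="New York City")`, fails loudly with `KeyError: 'date'` instead of quietly sending an underspecified prompt.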

To illustrate, let’s delve into the context-setting technique, a cornerstone in prompt engineering that significantly boosts LLM output consistency. By embedding context directly within the prompt, you inform the AI not only about the ‘what’ but also the ‘why’ and ‘how’ of the task at hand. For example, when seeking to generate a market analysis report, a prompt enriched with context such as, “Generate a comprehensive market analysis report focusing on the renewable energy sector in Europe, highlighting trends, key players, and future growth opportunities based on data from 2021 to 2023,” directs the AI to produce a focused and detailed output. Below is a simple comparison table showcasing the impact of prompt specificity and context-setting on LLM output:

| Prompt Type | Example | Output Quality |
|---|---|---|
| Generic | “Write a report.” | Low – Vague and broad, lacking detail. |
| Specific | “Write a report on the renewable energy sector in Europe, 2021-2023.” | High – Detailed and focused, with clear direction. |
| Context-Enriched | “Generate a comprehensive market analysis report focusing on the renewable energy sector in Europe, highlighting trends, key players, and future growth opportunities based on data from 2021 to 2023.” | Very High – Rich in detail, offering deep insights and actionable information. |
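The three rows of the table differ only in how many details the prompt carries. A hypothetical helper, `tiered_prompts` (plain Python, not a LangChain function), makes that progression explicit by building each tier from the same structured fields; with the table’s inputs it reproduces the three example prompts word for word.

```python
from typing import Dict, List


def join_items(items: List[str]) -> str:
    """Join items as natural English: 'a', 'a and b', or 'a, b, and c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + f", and {items[-1]}"


def tiered_prompts(topic: str, region: str, start: int, end: int,
                   focus: List[str]) -> Dict[str, str]:
    """Build the generic, specific, and context-enriched prompts from one set of fields."""
    generic = "Write a report."
    # Specific: adds the subject, the region, and the time span.
    specific = f"Write a report on the {topic} in {region}, {start}-{end}."
    # Context-enriched: also states the report type, what to highlight, and the data window.
    enriched = (
        f"Generate a comprehensive market analysis report focusing on the {topic} "
        f"in {region}, highlighting {join_items(focus)} based on data from {start} to {end}."
    )
    return {"Generic": generic, "Specific": specific, "Context-Enriched": enriched}


prompts = tiered_prompts("renewable energy sector", "Europe", 2021, 2023,
                         ["trends", "key players", "future growth opportunities"])
for tier, text in prompts.items():
    print(f"{tier}: {text}")
```

Keeping the details as fields rather than free text makes it easy to reuse the context-enriched structure for another sector or region while keeping every prompt equally specific.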

By incorporating these techniques into your prompt engineering practice, you can transform chaotic, unpredictable AI outputs into clear, consistent, and highly valuable responses. Whether you’re a developer, content creator, or business professional, mastering the art of prompt crafting is a powerful skill in the AI-driven world.

– Real-World Wins: Applying LangChain to Transform Your AI Projects

In the bustling world of AI development, the introduction of LangChain has been nothing short of revolutionary for those of us looking to harness the power of large language models (LLMs) more effectively. One of the most compelling applications of LangChain is its ability to transform chaotic, unpredictable AI outputs into consistent, reliable results. By leveraging LangChain’s toolkit, I’ve been able to craft prompts that not only guide the AI more precisely but also ensure that the output aligns closely with the project’s objectives. For instance, in a content generation project aimed at producing market analysis reports, the use of LangChain helped in structuring prompts that led to outputs with a uniform tone, style, and structure, significantly reducing the time spent on editing and revisions.
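To get the uniform structure described above, one option is to put the same format instructions in every prompt and then check each response against them, regenerating or flagging anything that doesn’t conform. LangChain provides output parsers for this kind of job, but the sketch below is plain Python so it stands alone; the section names in `REQUIRED_SECTIONS` and the helper functions are hypothetical examples.

```python
from typing import List

# Illustrative section list for a market analysis report (an assumption, not a standard).
REQUIRED_SECTIONS = ["Summary", "Market Trends", "Key Players", "Outlook"]

FORMAT_INSTRUCTIONS = (
    "Use a neutral, professional tone. Structure the report with exactly these "
    "Markdown headings, in this order: "
    + ", ".join(f"'## {s}'" for s in REQUIRED_SECTIONS)
    + "."
)


def build_report_prompt(topic: str) -> str:
    """Every report prompt carries the same format instructions."""
    return f"Write a market analysis report on {topic}.\n\n{FORMAT_INSTRUCTIONS}"


def headings_in(output: str) -> List[str]:
    """Collect the level-2 Markdown headings that appear in a model response."""
    return [line[3:].strip() for line in output.splitlines() if line.startswith("## ")]


def conforms(output: str) -> bool:
    """True only if the response has exactly the required headings, in order."""
    return headings_in(output) == REQUIRED_SECTIONS
```

A response that fails `conforms()` can be regenerated or set aside for review before anyone spends time editing it by hand.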

Here’s a breakdown of how LangChain can be applied to transform your AI projects:

- Prompt Engineering with Contextual Awareness: LangChain encourages the design of prompts that incorporate a clear understanding of the task at hand, leading to more relevant and focused AI outputs. For example, when I was tasked with generating technical documentation, prompts enriched with context about the specific technology, its users, and application scenarios yielded highly informative and targeted content.

- Sequence-to-Sequence Tasks Simplified: Complex tasks that involve converting one form of data into another (e.g., translating languages or summarizing long documents) benefit immensely from LangChain’s structured approach to prompt engineering. Once I defined clear start and end points within the prompts, the AI’s responses became markedly more coherent and on-point.
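The “clear start and end points” in the last item can be taken literally: wrap the source text in explicit markers and ask the model to wrap its answer in markers too, so the result can be extracted reliably even if the model adds a preamble. The marker strings and helper names below are illustrative choices, not a LangChain convention, and the sample response is made up.

```python
import re
from typing import Optional

# Illustrative delimiters; any strings unlikely to appear in real text will do.
INPUT_START, INPUT_END = "<<<SOURCE>>>", "<<<END SOURCE>>>"
OUTPUT_START, OUTPUT_END = "<<<SUMMARY>>>", "<<<END SUMMARY>>>"


def build_summary_prompt(document: str, max_sentences: int = 3) -> str:
    """Mark exactly where the input starts and ends, and where the answer must go."""
    return (
        f"Summarize the text between {INPUT_START} and {INPUT_END} "
        f"in at most {max_sentences} sentences. "
        f"Write only the summary, between {OUTPUT_START} and {OUTPUT_END}.\n\n"
        f"{INPUT_START}\n{document}\n{INPUT_END}"
    )


def extract_output(response: str) -> Optional[str]:
    """Return the text between the output markers, or None if they are missing."""
    pattern = re.escape(OUTPUT_START) + r"(.*?)" + re.escape(OUTPUT_END)
    match = re.search(pattern, response, flags=re.DOTALL)
    return match.group(1).strip() if match else None


# A made-up response with chatter around the delimited answer.
sample = "Sure! Here you go:\n<<<SUMMARY>>>\nSolar adoption keeps growing.\n<<<END SUMMARY>>>"
print(extract_output(sample))  # Solar adoption keeps growing.
```

Returning `None` when the markers are missing gives the calling code a clear signal to retry, rather than passing a malformed answer downstream.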

| Before LangChain | After LangChain |
|---|---|
| Outputs varied greatly in style and structure, requiring extensive manual editing. | Outputs exhibit uniformity in tone and structure, aligning with project goals. |
| Prompts often led to irrelevant or off-topic AI responses. | Prompts result in focused, relevant, and useful AI-generated content. |

By integrating LangChain into your workflow, you not only streamline the process of generating AI content but also elevate the quality and consistency of the outputs. Whether you’re developing educational materials, crafting engaging stories, or compiling research summaries, LangChain’s methodologies empower you to achieve your objectives with greater precision and efficiency. The real-world wins I’ve experienced in my projects are a testament to the transformative power of effective prompt engineering when combined with the innovative capabilities of LangChain.

– Beyond the Basics: Advanced Strategies for Maximizing LangChain’s Potential

Diving into the world of LangChain, I discovered that the key to enhancing the consistency of outputs from large language models (LLMs) lies in the artful crafting of prompts. This realization led me to develop a set of advanced strategies that significantly improved the quality and reliability of the responses I received. One such strategy involves meticulously structuring prompts to include clear, concise instructions and the context that guides the AI in generating the desired output. For instance, instead of a vague prompt like “Write an article,” I began using more structured prompts such as “Write a 500-word article on the benefits of renewable energy, aimed at high school students, including three key points with examples.” This level of specificity not only narrows the scope of the AI’s response but also sets clear expectations for the output.
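Because the refined prompt states measurable requirements (length, audience, number of key points), one option is to keep those requirements in a small structure that both renders the prompt and checks the response. `ArticleSpec` is a hypothetical helper, and the 10% word-count tolerance is an arbitrary assumption; LLMs rarely hit an exact word count, so some slack is needed.

```python
from dataclasses import dataclass

_NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five"}


@dataclass
class ArticleSpec:
    """Hypothetical container for the requirements a refined prompt spells out."""
    topic: str
    words: int
    audience: str
    key_points: int

    def to_prompt(self) -> str:
        count = _NUMBER_WORDS.get(self.key_points, str(self.key_points))
        noun = "key point" if self.key_points == 1 else "key points"
        return (
            f"Write a {self.words}-word article on {self.topic}, aimed at "
            f"{self.audience}, including {count} {noun} with examples."
        )

    def length_ok(self, text: str, tolerance: float = 0.10) -> bool:
        """True if the text's word count is within the given fraction of the target."""
        return abs(len(text.split()) - self.words) <= self.words * tolerance


spec = ArticleSpec("the benefits of renewable energy", 500, "high school students", 3)
print(spec.to_prompt())
```

The same spec can also drive the feedback loop described next: when `length_ok` fails, the mismatch becomes a concrete revision instruction rather than a vague “make it better.”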

Furthermore, I experimented with incorporating feedback loops into my prompt engineering process. This involves presenting the AI with its previous output and asking for refinements based on specific criteria. For example, after receiving an initial draft, I might use a prompt like “Revise the article to include more recent statistics on solar energy adoption and simplify the language for a younger audience.” Each round gives the model concrete criteria to revise against, leading to progressively more refined outputs. Below is a simple table showing how a prompt evolves as these advanced strategies are applied:

| Initial Prompt | Refined Prompt | Feedback-Loop Prompt |
|---|---|---|
| Write an article on renewable energy. | Write a 500-word article on the benefits of renewable energy, aimed at high school students, including three key points with examples. | Revise the article to include more recent statistics on solar energy adoption and simplify the language for a younger audience. |
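The feedback loop described above can be sketched as a small driver: draft once, then keep sending the model its previous output plus specific revision criteria until a check passes or a round limit is reached. `call_model` stands in for whatever LLM client you use (a LangChain chat model wrapped in a function, for example); here it is a deterministic fake, and `needs_solar_stats` is a toy check, so the control flow runs offline. The prompt wording and the `max_rounds` default are assumptions.

```python
from typing import Callable, Optional, Tuple


def revision_prompt(draft: str, feedback: str) -> str:
    """Show the model its previous output together with concrete revision criteria."""
    return (
        "Here is your previous draft:\n\n"
        f"{draft}\n\n"
        f"Revise it as follows: {feedback}\n"
        "Return only the revised draft."
    )


def refine(first_prompt: str,
           call_model: Callable[[str], str],
           critique: Callable[[str], Optional[str]],
           max_rounds: int = 3) -> Tuple[str, int]:
    """Draft once, then revise while critique() returns feedback (up to max_rounds)."""
    draft = call_model(first_prompt)
    for revisions in range(max_rounds):
        feedback = critique(draft)
        if feedback is None:  # the draft meets every criterion
            return draft, revisions
        draft = call_model(revision_prompt(draft, feedback))
    return draft, max_rounds


# Offline stand-ins so the loop can run without an API key.
def fake_model(prompt: str) -> str:
    if prompt.startswith("Here is your previous draft"):
        previous = prompt.split("\n\n")[1]  # assumes the draft has no blank lines
        return previous + " [added recent solar statistics]"
    return "Renewable energy is good for the planet."


def needs_solar_stats(draft: str) -> Optional[str]:
    if "solar statistics" not in draft:
        return "include more recent statistics on solar energy adoption"
    return None


final, revisions = refine("Write an article on renewable energy.", fake_model, needs_solar_stats)
print(revisions, final)
```

In practice the critique step could be you reading the draft, a rule-based check (word count, required facts), or a second model call; capping the rounds keeps a loop that never converges from running indefinitely.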

By employing these techniques, not only did I manage to improve the consistency and relevance of the AI-generated content, but I also unlocked new levels of creativity and precision in the outputs. These strategies, when applied thoughtfully, can transform the way we interact with LLMs, moving from chaotic and unpredictable results to clarity and purpose-driven content creation. Whether you’re a developer, content creator, or business professional, understanding and implementing these advanced prompt engineering techniques can significantly elevate the quality of your AI-assisted projects.

Concluding Remarks

And there you have it, folks – a journey from the chaotic beginnings of prompt crafting to achieving a newfound clarity and consistency in your interactions with large language models (LLMs) through the innovative use of LangChain. Whether you’re a developer fine-tuning the next big app, a content creator looking to streamline your workflow, or a business professional seeking to leverage AI for competitive advantage, the principles we’ve explored today are your stepping stones to success.

Remember, the key takeaways are:

- Structure Your Prompts Clearly: A well-structured prompt is the backbone of effective communication with LLMs. It guides the model in understanding exactly what you’re asking for.

- Be Specific: Specificity is your best friend. The more detailed your prompt, the more closely the output tends to match your expectations.

- Set the Context: Context-setting is crucial. It provides the model with the necessary background to generate relevant and accurate responses.

- Experiment with LangChain: As we’ve seen, LangChain can significantly improve the consistency of LLM outputs. Don’t shy away from experimenting with it to find what works best for your specific needs.

We’ve covered a lot of ground, but this is just the beginning. The field of prompt engineering is evolving rapidly, and there’s always more to learn. So, keep experimenting, keep learning, and most importantly, keep sharing your discoveries with the community. Your insights could be the key that unlocks new possibilities for someone else.

For those eager to dive deeper, don’t forget to check out the resources section at the end of this article. It points to documentation, tutorials, and forums where you can connect with fellow AI enthusiasts and prompt engineering experts.

In closing, mastering prompt engineering is an ongoing journey of discovery and innovation. By applying the techniques we’ve discussed and embracing the power of tools like LangChain, you’re well on your way to transforming the way you interact with AI. Here’s to your success in turning chaos into clarity and unlocking the full potential of large language models!

Happy prompting!

Resources:

- LangChain Official Documentation

- Effective Prompt Engineering Techniques

- Large Language Models Forum

- Prompt Crafting Tutorials
