Introduction

In the vast and ever-evolving landscape of Artificial Intelligence (AI), the race to build bigger, more complex models has been relentless. Giants in the field have long championed the idea that “bigger is better” when it comes to language models. Enter the era of Large Language Models (LLMs): behemoths of technology that promise unparalleled understanding and generation of human language. But at what cost? Beyond the headlines touting their latest achievements lies a growing question: Is there such a thing as large language overkill?

This article explores the world of LLMs and their smaller, yet surprisingly capable, counterparts, the Small Language Models (SLMs). We’ll delve into the heart of the debate, shedding light on how these David-and-Goliath battles of AI are reshaping our understanding of efficiency, effectiveness, and environmental impact in the digital age. Whether you’re a technology enthusiast, a business professional, a curious student, or simply a keen observer of the digital revolution, this exploration offers a fresh perspective on the AI landscape.

Why Size Might Not Always Matter

- Efficiency vs. Efficacy: Discover how smaller models are challenging the dominance of the largest models by achieving similar, and on some narrow tasks superior, results with a fraction of the resources.

- The Environmental Footprint: Understand the significant energy demands of training and running the largest models and why this raises important ethical and environmental concerns.

- Cost Implications: Learn about the financial barriers to accessing and using the largest models, and how more accessible models are democratizing AI technology.

The David and Goliath of AI

- Case Studies: Through real-world examples, we’ll illustrate how smaller AI models are not just competing but often outperforming their gargantuan counterparts in specific tasks.

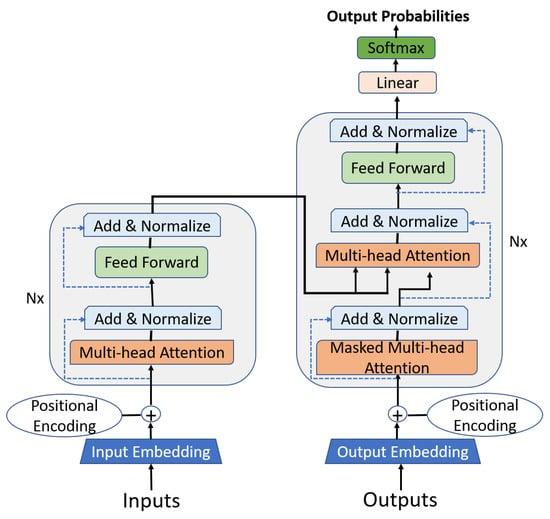

- Behind the Scenes: Get an insider’s look at the technology powering these models, and why agility and specialization can sometimes trump sheer size.

Looking Ahead

- The Future of AI Models: Speculate on the evolving landscape of AI development. Will the trend towards ever-larger models continue, or will a new paradigm emerge?

- Implications for Industries and Everyday Life: Consider how the outcomes of this technological tug-of-war could influence various sectors, including healthcare, finance, and education, and what it means for the future of AI in our daily lives.

Join us as we navigate the complexities of this topic, breaking down intricate AI concepts into accessible insights. Our journey through the world of large language models and their smaller, resourceful rivals promises not just to enlighten but to inspire a deeper reflection on the path forward in AI development.

David and Goliath Revisited: The Rise of Smaller Language Models

In the realm of artificial intelligence, the narrative often centers around the colossal, resource-hungry language models that dominate headlines. However, a new chapter is unfolding, one where Smaller Language Models (SLMs) are proving that size isn’t everything. Because they have far fewer parameters, SLMs typically need much less compute, and often less data, to train and run. That makes them not only more environmentally friendly but also more accessible to organizations with limited computational resources. This shift towards efficiency over brute force opens up opportunities for innovation and customization that were previously thought to be the exclusive domain of tech giants.

The advantages of SLMs extend beyond their lower resource requirements. They offer a level of agility and adaptability that larger models often struggle to match. For instance, SLMs can be fine-tuned for specific tasks or industries at a fraction of the computational cost, allowing for more personalized and relevant applications. This is particularly beneficial in sectors like healthcare and finance, where the ability to quickly adapt to new data or regulations can significantly impact performance and compliance. Moreover, the reduced complexity of SLMs can make them easier to inspect and interpret, a crucial factor in applications where understanding the “why” behind a model’s decision is as important as the decision itself.

| Feature | Large Language Models | Smaller Language Models |
|---|---|---|
| Resource Intensity | High | Low |
| Adaptability | Low | High |
| Interpretability | Low | High |
| Environmental Impact | High | Low |
| Accessibility for Small Organizations | Low | High |

This table succinctly captures the contrast between the traditional behemoths of AI and the emerging SLMs, highlighting the latter’s potential to democratize AI technology. As we continue to navigate the complexities of artificial intelligence, the rise of SLMs represents a pivotal moment, reminding us that in the world of AI, bigger isn’t always better.

Efficiency Over Size: Why SLMs Are Winning the Race

The adage “bigger isn’t always better” is proving increasingly apt in artificial intelligence. Small Language Models (SLMs) are emerging as formidable contenders against their larger, more resource-intensive counterparts, demonstrating that efficiency can triumph over size. Compared with their gargantuan peers, SLMs typically need less data and compute for training, consume a fraction of the energy, and can be deployed more swiftly across various platforms. This makes them not only more environmentally friendly but also more accessible to organizations of all sizes. The agility of SLMs allows for rapid iteration and customization, enabling businesses to tailor AI solutions to their specific needs without the hefty infrastructure and computational overhead.
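To make the “computational overhead” point more concrete, here is a minimal sketch that estimates how much memory is needed just to hold a model’s weights (parameter count times bytes per parameter). The two model sizes are hypothetical values chosen for illustration, not figures from this article, and the estimate ignores activations, caches, and optimizer state, so real requirements are higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Return the memory needed to hold the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    examples = [
        ("small model (1B parameters)", 1_000_000_000),
        ("large model (70B parameters)", 70_000_000_000),
    ]
    for name, params in examples:
        print(f"{name}: {weight_memory_gib(params):.1f} GiB in fp16")
```

Under these assumptions, the smaller model’s weights fit comfortably on a single consumer-grade device, while the larger one needs specialized hardware, which is the accessibility gap described above.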

The advantages of SLMs extend beyond their operational efficiency and lower barriers to entry. They also offer a level of flexibility and adaptability that larger models often struggle to achieve. For instance, SLMs can be fine-tuned for niche applications, from optimizing logistics in supply chain management to providing personalized learning experiences in education. This adaptability is crucial in a world where the one-size-fits-all approach of larger models often falls short in addressing the unique challenges and opportunities within specific industries. Moreover, the reduced complexity of SLMs can make them easier to interpret, a key factor in ensuring AI’s ethical and responsible use. By focusing on what truly matters (performance, accessibility, and sustainability), SLMs are setting a new standard in the AI landscape, proving that when it comes to artificial intelligence, sometimes less is indeed more.

| Feature | Large Language Models | Small Language Models |
|---|---|---|
| Training Data Requirement | Extensive | Minimal |
| Energy Consumption | High | Low |
| Deployment Speed | Slower | Faster |
| Customization | Limited | High |
| Interpretability | Complex | Simpler |

By leveraging the strengths of SLMs, organizations can harness the power of AI in a more sustainable, efficient, and ethical manner. This shift towards smaller, more efficient models is not just a trend but a necessary evolution in the field of artificial intelligence, ensuring that the benefits of AI can be realized across a broader spectrum of society.

From Theory to Practice: Real-World Applications of SLMs

In the bustling world of artificial intelligence, Small Language Models (SLMs) are proving that size isn’t everything. Unlike their larger counterparts, which demand vast amounts of data and computing power, SLMs operate on a more modest scale. Yet, they pack a surprising punch when it comes to efficiency and application. For instance, in the realm of customer service, SLMs are being deployed to power chatbots that can handle a wide range of queries with precision and speed. This not only enhances customer experience but also reduces the workload on human staff. Similarly, in content creation, SLMs assist in generating articles, reports, and summaries, showcasing their ability to understand and produce human-like text.

The real magic of SLMs, however, shines in their application across various industries, demonstrating their versatility and potential to revolutionize traditional processes. Consider the following examples:

- Healthcare: SLMs are being used to sift through medical research and patient data to assist in diagnosis and treatment plans, making healthcare more accessible and personalized.

- Finance: In the financial sector, SLMs help in analyzing market trends and managing personal finance queries, offering insights that were previously only available through extensive research.

- Education: They are transforming the educational landscape by providing personalized learning experiences and tutoring services, adapting to the unique needs of each student.

| Industry | Application | Impact |
|---|---|---|
| Healthcare | Diagnosis Assistance | Improves patient care |
| Finance | Market Analysis | Enhances decision-making |
| Education | Personalized Learning | Boosts student engagement |

These examples underscore the transformative power of SLMs in bridging the gap between theoretical AI and practical, real-world applications. By leveraging SLMs, industries can achieve greater efficiency, accuracy, and personalization, proving that when it comes to AI, bigger isn’t always better.

Looking Ahead: The Future Landscape of Language AI

In the rapidly evolving world of artificial intelligence, the emergence of Smaller Language Models (SLMs) presents a compelling narrative against the backdrop of their larger, more resource-intensive counterparts. Unlike the behemoths that demand vast amounts of data, energy, and computational power, SLMs operate on a leaner, more efficient paradigm. This shift is not merely a technical curiosity but a potential game-changer in democratizing AI technologies. By requiring fewer computational resources, SLMs open the door to innovation in regions and sectors where access to cutting-edge hardware is limited. This inclusivity fosters a broader, more diverse development of AI applications, from enhancing educational tools in under-resourced schools to powering small businesses with sophisticated yet affordable AI solutions.

The advantages of SLMs extend beyond accessibility. Their efficiency translates into faster development cycles and reduced environmental impact, aligning with the growing demand for sustainable technologies. Consider the following comparisons:

- Development Speed: SLMs can be trained and iterated upon more quickly than larger models, enabling rapid prototyping and deployment.

- Cost-Effectiveness: Lower computational demands mean reduced costs for training and deploying AI models, making advanced AI tools accessible to a wider range of developers and organizations.

- Environmental Sustainability: With concerns mounting over the carbon footprint of training large AI models, SLMs offer a greener alternative that aligns with global sustainability goals.

| Feature | Large Language Models | Smaller Language Models |
|---|---|---|
| Resource Intensity | High | Low |
| Accessibility | Limited | Widespread |
| Development Speed | Slower | Faster |
| Sustainability | Lower | Higher |
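The cost and sustainability comparison can be put in rough numbers. The sketch below uses a commonly cited rule of thumb for dense transformer models: training compute is approximately 6 floating-point operations per parameter per training token. The model and dataset sizes are hypothetical, and the rule is an approximation rather than a measurement of any specific system.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * num_params * num_tokens


def relative_training_cost(small: tuple[float, float],
                           large: tuple[float, float]) -> float:
    """How many times more training compute the large setup needs.

    Each argument is a (num_params, num_tokens) pair.
    """
    return training_flops(*large) / training_flops(*small)


if __name__ == "__main__":
    # Hypothetical configurations, chosen only for illustration.
    small_model = (1e9, 2e10)     # 1B parameters, 20B training tokens
    large_model = (7e10, 1.4e12)  # 70B parameters, 1.4T training tokens
    factor = relative_training_cost(small_model, large_model)
    print(f"Large setup needs roughly {factor:,.0f}x the training compute")
```

Because compute scales with both model size and data size, growing each by a similar factor multiplies the total cost, which is why the gap in energy and budget between small and large models can be so wide.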

As we look toward the future, the trajectory of language AI seems poised to embrace the principles of efficiency, inclusivity, and sustainability championed by SLMs. This paradigm shift not only challenges the prevailing notion that ‘bigger is better’ in the realm of AI but also underscores the importance of innovation that is accessible, rapid, and environmentally responsible. In doing so, SLMs are not just an alternative to their larger counterparts; they represent a new vision for the future of AI, where the value is measured not by the size of the data or the model but by the impact and accessibility of the technology.

Wrapping Up

As we wrap up our exploration into the world of Large Language Models (LLMs) and their smaller, yet impressively capable counterparts, the SLMs (Smaller Language Models), it’s clear that the landscape of artificial intelligence is as dynamic as it is diverse. The journey through the intricacies of these models reveals a fascinating narrative: size isn’t always synonymous with success. In the realm of AI, efficiency, adaptability, and precision can indeed triumph over sheer scale.

The implications of this shift are profound, touching upon various sectors from healthcare, where personalized patient care can be enhanced by nimble AI systems, to education, where tailored learning experiences can be crafted without the need for massive computational resources. Businesses, too, stand to gain from adopting SLMs, as they offer a more sustainable and cost-effective approach to harnessing the power of AI for decision-making, customer service, and beyond.

Key Takeaways:

- Efficiency Over Size: SLMs challenge the notion that bigger is better, offering comparable, if not superior, performance with a fraction of the resource requirements.

- Accessibility and Sustainability: The reduced computational demand of SLMs makes AI more accessible to smaller organizations and contributes to more sustainable technology practices.

- Broad Implications: The potential applications of SLMs span across industries, promising to revolutionize how we approach problems and opportunities in various domains.

As we look to the future, it’s evident that the evolution of AI models like SLMs will continue to shape our understanding and utilization of technology. The journey of AI is far from linear; it’s a rich tapestry of innovation, where smaller often means smarter, and efficiency paves the way for broader adoption and impact.

In closing, whether you’re a technology enthusiast keen on the latest AI trends, a business professional exploring new tools for competitive advantage, a student diving into the depths of AI concepts, or simply a curious reader fascinated by the potential of artificial intelligence to transform our world, the story of SLMs versus their larger counterparts is a compelling chapter in the ongoing narrative of AI. It’s a reminder that in the realm of technology, as in life, the underdog can and often does come out on top.

Let’s continue to watch this space with keen interest, for the innovations of today are the foundations upon which tomorrow’s advancements will be built. The dialog between the big and the small, the efficient and the powerful, is far from over. In fact, it’s just getting started.